Regression Analysis

General Idea:

In USA Drug Overdoes Analyzing, we made a comprehensive analysis for the crude death rate resulted from drug overdose in us. We compared the change of death rate for different states in different years. Here in regression analysis, our goal is trying to build a regression model which explains the variances of drug overdose death rate among different states. The possible predictors are from social economic index and behavior statistics of residents for fifty states and Washington D.C.. We only use drug overdose death rate from 2015 - 2019 to guarantee the data availability for possible predictors.

Possible Predictors

Poverty Rate: The poverty data is from United States Census Bureau. We imported and cleaned to get the poverty rate for fifty states and Washington D.C. from 2015 - 2019. Then, we take an average of the poverty rate for 5 years.

## load the poverty rate data from 2015-2019

poverty_2015 =

read_csv("data_regression_us/povety/poverty_us_2015.csv") %>%

select(NAME, S1701_C03_001E)

poverty_2015 =

poverty_2015[-1,] %>%

mutate(

poverty_population_2015 = as.numeric(S1701_C03_001E),

state = NAME

) %>%

select(-c(NAME, S1701_C03_001E)) %>%

relocate(state)

poverty_2016 =

read_csv("data_regression_us/povety/poverty_us_2016.csv") %>%

select(NAME, S1701_C03_001E)

poverty_2016 =

poverty_2016[-1,] %>%

mutate(

poverty_population_2016 = as.numeric(S1701_C03_001E),

state = NAME

) %>%

select(-c(NAME, S1701_C03_001E)) %>%

relocate(state)

poverty_2017 =

read_csv("data_regression_us/povety/poverty_us_2017.csv") %>%

select(NAME, S1701_C03_001E)

poverty_2017 =

poverty_2017[-1,] %>%

mutate(

poverty_population_2017 = as.numeric(S1701_C03_001E),

state = NAME

) %>%

select(-c(NAME, S1701_C03_001E)) %>%

relocate(state)

poverty_2018 =

read_csv("data_regression_us/povety/poverty_us_2018.csv") %>%

select(NAME, S1701_C03_001E)

poverty_2018 =

poverty_2018[-1,] %>%

mutate(

poverty_population_2018 = as.numeric(S1701_C03_001E),

state = NAME

) %>%

select(-c(NAME, S1701_C03_001E)) %>%

relocate(state)

poverty_2019 =

read_csv("data_regression_us/povety/poverty_us_2019.csv") %>%

select(NAME, S1701_C03_001E)

poverty_2019 =

poverty_2019[-1,] %>%

mutate(

poverty_population_2019 = as.numeric(S1701_C03_001E),

state = NAME

) %>%

select(-c(NAME, S1701_C03_001E)) %>%

relocate(state)

## combine the datasets, take average

poverty_overall =

join_all(list(poverty_2019, poverty_2018, poverty_2017, poverty_2016, poverty_2015))

poverty_overall =

poverty_overall %>%

mutate(sum_of_rows = rowSums((poverty_overall[,2:6]), na.rm = TRUE),

mean_poverty_rate = 0.01 * sum_of_rows/5) %>%

select(state, mean_poverty_rate)Population: The population data is from United States Census Bureau. We imported the population data for fifty states and Washington D.C. from 2020 United States census. Here we do not count population as a predictors. This data is only used to compute other predictors from available data.

population_us =

read_csv("data_regression_us/population/ACSDT5Y2019.B01003_data_with_overlays_2021-11-16T170855.csv")

population_us =

population_us[-1,] %>%

mutate(

state = NAME,

population = as.numeric(B01003_001E)

) %>%

select(state, population)Education Rate: The education data is from United States Census Bureau. We imported the data for fifty states and Washington D.C. from 2015 - 2019. It contains the number of population with different education level. We consider bachelor’s education as the threshold of “high education”. Therefore, we sum-up the number of people who has a bachelor or above degree. Then, we take an average of the number for 5 years. And the education rate can be calculated by dividing the high-educated population with the total population. Here education rate means that the proportion of population who receive bachelor’s or higher education.

## load the education data from 2015-2019

education_2015 =

read_csv("data_regression_us/education/education_us_2015.csv")

education_2015 =

education_2015[-1,] %>%

select(B15003_022E, B15003_023E, B15003_024E, B15003_025E, NAME) %>%

mutate(

number_bachelor = as.numeric(B15003_022E),

number_master = as.numeric(B15003_023E),

number_profesional = as.numeric(B15003_024E),

number_doctor = as.numeric(B15003_025E),

state = NAME

) %>%

select(state, number_bachelor, number_master, number_profesional, number_doctor) %>%

mutate(sum_high_educ_2015 = number_bachelor + number_master + number_profesional + number_doctor) %>%

select(state, sum_high_educ_2015)

education_2016 =

read_csv("data_regression_us/education/education_us_2016.csv")

education_2016 =

education_2016[-1,] %>%

select(B15003_022E, B15003_023E, B15003_024E, B15003_025E, NAME) %>%

mutate(

number_bachelor = as.numeric(B15003_022E),

number_master = as.numeric(B15003_023E),

number_profesional = as.numeric(B15003_024E),

number_doctor = as.numeric(B15003_025E),

state = NAME

) %>%

select(state, number_bachelor, number_master, number_profesional, number_doctor) %>%

mutate(sum_high_educ_2016 = number_bachelor + number_master + number_profesional + number_doctor) %>%

select(state, sum_high_educ_2016)

education_2017 =

read_csv("data_regression_us/education/education_us_2017.csv")

education_2017 =

education_2017[-1,] %>%

select(B15003_022E, B15003_023E, B15003_024E, B15003_025E, NAME) %>%

mutate(

number_bachelor = as.numeric(B15003_022E),

number_master = as.numeric(B15003_023E),

number_profesional = as.numeric(B15003_024E),

number_doctor = as.numeric(B15003_025E),

state = NAME

) %>%

select(state, number_bachelor, number_master, number_profesional, number_doctor) %>%

mutate(sum_high_educ_2017 = number_bachelor + number_master + number_profesional + number_doctor) %>%

select(state, sum_high_educ_2017)

education_2018 =

read_csv("data_regression_us/education/education_us_2018.csv")

education_2018 =

education_2018[-1,] %>%

select(B15003_022E, B15003_023E, B15003_024E, B15003_025E, NAME) %>%

mutate(

number_bachelor = as.numeric(B15003_022E),

number_master = as.numeric(B15003_023E),

number_profesional = as.numeric(B15003_024E),

number_doctor = as.numeric(B15003_025E),

state = NAME

) %>%

select(state, number_bachelor, number_master, number_profesional, number_doctor) %>%

mutate(sum_high_educ_2018 = number_bachelor + number_master + number_profesional + number_doctor) %>%

select(state, sum_high_educ_2018)

education_2019 =

read_csv("data_regression_us/education/education_us_2019.csv")

education_2019 =

education_2019[-1,] %>%

select(B15003_022E, B15003_023E, B15003_024E, B15003_025E, NAME) %>%

mutate(

number_bachelor = as.numeric(B15003_022E),

number_master = as.numeric(B15003_023E),

number_profesional = as.numeric(B15003_024E),

number_doctor = as.numeric(B15003_025E),

state = NAME

) %>%

select(state, number_bachelor, number_master, number_profesional, number_doctor) %>%

mutate(sum_high_educ_2019 = number_bachelor + number_master + number_profesional + number_doctor) %>%

select(state, sum_high_educ_2019)

## combine to produce overall education data, take average for 5 years

education_overall =

join_all(list(education_2019, education_2018, education_2017, education_2016, education_2015))

education_overall =

education_overall %>%

mutate(sum_of_rows = rowSums((education_overall[,2:6]), na.rm = TRUE),

mean_educ = sum_of_rows/5) %>%

select(state, mean_educ)

## compute education rate

education_rate =

left_join(education_overall, population_us) %>%

mutate(education_rate = mean_educ / population) %>%

select(state, education_rate)Unemployment Rate: The unemployment data is from United States Census Bureau. We imported and cleaned to get the unemployment rate for fifty states and Washington D.C. from 2015 - 2019. Then, we take an average of the unemployment rate for 5 years.

## load the unemployment 2015-2019

unemployment_2015 =

read_csv("data_regression_us/unemployment/unemployment_2015.csv")

unemployment_2015 =

unemployment_2015[-1,] %>%

select(NAME, S2301_C04_001E) %>%

mutate(

unemployment_rate_2015 = as.numeric(S2301_C04_001E),

state = NAME

) %>%

select(state, unemployment_rate_2015)

unemployment_2016 =

read_csv("data_regression_us/unemployment/unemployment_2016.csv")

unemployment_2016 =

unemployment_2016[-1,] %>%

select(NAME, S2301_C04_001E) %>%

mutate(

unemployment_rate_2016 = as.numeric(S2301_C04_001E),

state = NAME

) %>%

select(state, unemployment_rate_2016)

unemployment_2017 =

read_csv("data_regression_us/unemployment/unemployment_2017.csv")

unemployment_2017 =

unemployment_2017[-1,] %>%

select(NAME, S2301_C04_001E) %>%

mutate(

unemployment_rate_2017 = as.numeric(S2301_C04_001E),

state = NAME

) %>%

select(state, unemployment_rate_2017)

unemployment_2018 =

read_csv("data_regression_us/unemployment/unemployment_2018.csv")

unemployment_2018 =

unemployment_2018[-1,] %>%

select(NAME, S2301_C04_001E) %>%

mutate(

unemployment_rate_2018 = as.numeric(S2301_C04_001E),

state = NAME

) %>%

select(state, unemployment_rate_2018)

unemployment_2019 =

read_csv("data_regression_us/unemployment/unemployment_2019.csv")

unemployment_2019 =

unemployment_2019[-1,] %>%

select(NAME, S2301_C04_001E) %>%

mutate(

unemployment_rate_2019 = as.numeric(S2301_C04_001E),

state = NAME

) %>%

select(state, unemployment_rate_2019)

## combine to overall unemployment df, take average of unemployment rate for 5 years

unemployment_overall =

join_all(list(unemployment_2019, unemployment_2018, unemployment_2017, unemployment_2016, unemployment_2015))

unemployment_overall =

unemployment_overall %>%

mutate(sum_of_rows = rowSums((unemployment_overall[,2:6]), na.rm = TRUE),

mean_unemployment_rate = 0.01 * sum_of_rows/5) %>%

select(state, mean_unemployment_rate)Crude Divorce Rate: The divorce data is from United States Census Bureau. We imported the data for fifty states and Washington D.C. from 2015 - 2019. It contains the number of population who divorce with their partners. We take an average of the number for 5 years. And the crude divorce rate can be calculated by dividing the divorced population with the total population.

## load the divorce count data from 2015-2019

divorce_2015 =

read_csv("data_regression_us/divorce/divorce_number_2015.csv")

divorce_2015 =

divorce_2015[-1,] %>%

select(NAME, B12503_004E, B12503_009E) %>%

mutate(

B12503_004E = as.numeric(B12503_004E),

B12503_009E = as.numeric(B12503_009E),

num_divorce = B12503_004E + B12503_009E,

divorce_num_2015 = as.numeric(num_divorce),

state = NAME

) %>%

select(state, divorce_num_2015)

divorce_2016 =

read_csv("data_regression_us/divorce/divorce_number_2016.csv")

divorce_2016 =

divorce_2016[-1,] %>%

select(NAME, B12503_004E, B12503_009E) %>%

mutate(

B12503_004E = as.numeric(B12503_004E),

B12503_009E = as.numeric(B12503_009E),

num_divorce = B12503_004E + B12503_009E,

divorce_num_2016 = as.numeric(num_divorce),

state = NAME

) %>%

select(state, divorce_num_2016)

divorce_2017 =

read_csv("data_regression_us/divorce/divorce_number_2017.csv")

divorce_2017 =

divorce_2017[-1,] %>%

select(NAME, B12503_004E, B12503_009E) %>%

mutate(

B12503_004E = as.numeric(B12503_004E),

B12503_009E = as.numeric(B12503_009E),

num_divorce = B12503_004E + B12503_009E,

divorce_num_2017 = as.numeric(num_divorce),

state = NAME

) %>%

select(state, divorce_num_2017)

divorce_2018 =

read_csv("data_regression_us/divorce/divorce_number_2018.csv")

divorce_2018 =

divorce_2018[-1,] %>%

select(NAME, B12503_004E, B12503_009E) %>%

mutate(

B12503_004E = as.numeric(B12503_004E),

B12503_009E = as.numeric(B12503_009E),

num_divorce = B12503_004E + B12503_009E,

divorce_num_2018 = as.numeric(num_divorce),

state = NAME

) %>%

select(state, divorce_num_2018)

divorce_2019 =

read_csv("data_regression_us/divorce/divorce_number_2019.csv")

divorce_2019 =

divorce_2019[-1,] %>%

select(NAME, B12503_004E, B12503_009E) %>%

mutate(

B12503_004E = as.numeric(B12503_004E),

B12503_009E = as.numeric(B12503_009E),

num_divorce = B12503_004E + B12503_009E,

divorce_num_2019 = as.numeric(num_divorce),

state = NAME

) %>%

select(state, divorce_num_2019)

## combine 5 df to a overall divorce count df, take average for 5 years

divorce_overall =

join_all(list(divorce_2019, divorce_2018, divorce_2017, divorce_2016, divorce_2015))

divorce_overall =

divorce_overall %>%

mutate(sum_of_rows = rowSums((divorce_overall[,2:6]), na.rm = TRUE),

mean_divorce_num = sum_of_rows/5) %>%

select(state, mean_divorce_num)

## compute crude divorce rate

divorce_rate =

left_join(divorce_overall, population_us) %>%

mutate(divorce_rate = mean_divorce_num / population) %>%

select(state, divorce_rate)Smoke Rate: The smoke rate data is from America’s Health Rankings. The smoke rate is defined as “the percentage of adults who reported smoking at least 100 cigarettes in their lifetime and currently smoke daily or some days”. We imported and clean to get the smoke rate for fifty states and Washington D.C. from 2015 - 2019. Then, we take an average of the smoke rate for 5 years.

smoke_rate =

read_excel("data_regression_us/smoke/smoke_rate.xlsx")

smoke_rate =

smoke_rate %>%

mutate(sum_of_rows = rowSums((smoke_rate[,2:6]), na.rm = TRUE),

mean_smoke_rate = sum_of_rows/5) %>%

select(state, mean_smoke_rate)Binge Drinking: Binge drinking data is collected from Statista. Binge drinking prevalence for the 51 jurisdictions (50 states and Washington D.C.). The prevalence of binge drinking is defined as number of binge drinker over the whole population within each jurisdiction.

state_level = c(state.name[1:8], "District of Columbia", state.name[9:50])

drinking_19 =

read_excel("./data/statistic_id378966_us-binge-drinking-among-adults-by-state-2019.xlsx", range = "Data!B3:C57") %>%

janitor::clean_names() %>%

slice(-c(1,2)) %>%

mutate(binge_drink_rate = 0.01 * as.numeric(x2)) %>%

select(state = u_s_binge_drinking_among_adults_by_state_2019, binge_drink_rate)Crude Death Rate: Mentioned in the USA overview section as well. In a few words, it is the number of deaths due to drug overdose per 100,000 people.

crudedeath_rate =

read.csv("./data/agegroup_race_state_year_99-19.csv") %>%

janitor::clean_names() %>%

select(state, year = year_code, age = ten_year_age_groups_code, race, deaths,population) %>%

drop_na() %>%

filter(year %in% c("2015", "2016", "2017", "2018", "2019")) %>%

group_by(state) %>%

dplyr::summarize(total_deaths = sum(deaths),

total_population = sum(population)) %>%

mutate(crudedeath_rate = 0.01 * (total_deaths/total_population) * 100000) %>%

select(state, crudedeath_rate)Regression Analyses

Regression Overall Data

The regression overall data is produced by combining the dataset generated above. For each state, this data contains the response – the crude death rate for drug overdose and all possible predictors – binge drinking rate, divorce rate, unemployment rate, education rate, poverty rate, and smoke rate. The following tables describe this data:

overall_regression_view =

join_all(list(crudedeath_rate, drinking_19, divorce_rate, unemployment_overall, education_rate, poverty_overall, smoke_rate))

overall_regression =

overall_regression_view %>%

select(-state)

overall_regression_view %>% head(10) %>% knitr::kable(caption = "The First 10 Rows of Regression Overall Data")| state | crudedeath_rate | binge_drink_rate | divorce_rate | mean_unemployment_rate | education_rate | mean_poverty_rate | mean_smoke_rate |

|---|---|---|---|---|---|---|---|

| Alabama | 0.2270304 | 0.136 | 0.5606409 | 0.0598 | 0.1718737 | 0.1696 | 0.2082 |

| Alaska | 0.2663021 | 0.182 | 0.5173449 | 0.0722 | 0.1932690 | 0.1046 | 0.1962 |

| Arizona | 0.2960310 | 0.165 | 0.5365614 | 0.0594 | 0.1959374 | 0.1524 | 0.1496 |

| Arkansas | 0.2089063 | 0.149 | 0.5776714 | 0.0516 | 0.1530968 | 0.1722 | 0.2364 |

| California | 0.1640786 | 0.183 | 0.5099043 | 0.0606 | 0.2273319 | 0.1350 | 0.1160 |

| Colorado | 0.2309491 | 0.196 | 0.5524384 | 0.0434 | 0.2791094 | 0.1034 | 0.1520 |

| Connecticut | 0.4042932 | 0.177 | 0.5388083 | 0.0604 | 0.2712121 | 0.1006 | 0.1342 |

| Delaware | 0.6017141 | 0.189 | 0.4362088 | 0.0542 | 0.2215250 | 0.1230 | 0.1770 |

| District of Columbia | 0.8842317 | 0.272 | 0.3632256 | 0.0692 | 0.4124074 | 0.1644 | 0.1508 |

| Florida | 0.2746527 | 0.180 | 0.5690009 | 0.0564 | 0.2120040 | 0.1414 | 0.1590 |

summary(overall_regression_view) %>% knitr::kable(caption = "Summary of The Response and The Predictors")| state | crudedeath_rate | binge_drink_rate | divorce_rate | mean_unemployment_rate | education_rate | mean_poverty_rate | mean_smoke_rate | |

|---|---|---|---|---|---|---|---|---|

| Length:51 | Min. :0.1182 | Min. :0.1200 | Min. :0.3632 | Min. :0.02740 | Min. :0.1449 | Min. :0.0762 | Min. :0.0910 | |

| Class :character | 1st Qu.:0.2057 | 1st Qu.:0.1653 | 1st Qu.:0.5256 | 1st Qu.:0.04270 | 1st Qu.:0.1873 | 1st Qu.:0.1092 | 1st Qu.:0.1511 | |

| Mode :character | Median :0.2710 | Median :0.1865 | Median :0.5497 | Median :0.05320 | Median :0.2061 | Median :0.1306 | Median :0.1718 | |

| NA | Mean :0.3015 | Mean :0.1858 | Mean :0.5441 | Mean :0.05128 | Mean :0.2153 | Mean :0.1321 | Mean :0.1752 | |

| NA | 3rd Qu.:0.3561 | 3rd Qu.:0.2010 | 3rd Qu.:0.5649 | 3rd Qu.:0.05900 | 3rd Qu.:0.2357 | 3rd Qu.:0.1502 | 3rd Qu.:0.1961 | |

| NA | Max. :0.8842 | Max. :0.2720 | Max. :0.6018 | Max. :0.07460 | Max. :0.4124 | Max. :0.2038 | Max. :0.2568 | |

| NA | NA | NA’s :1 | NA | NA | NA | NA | NA |

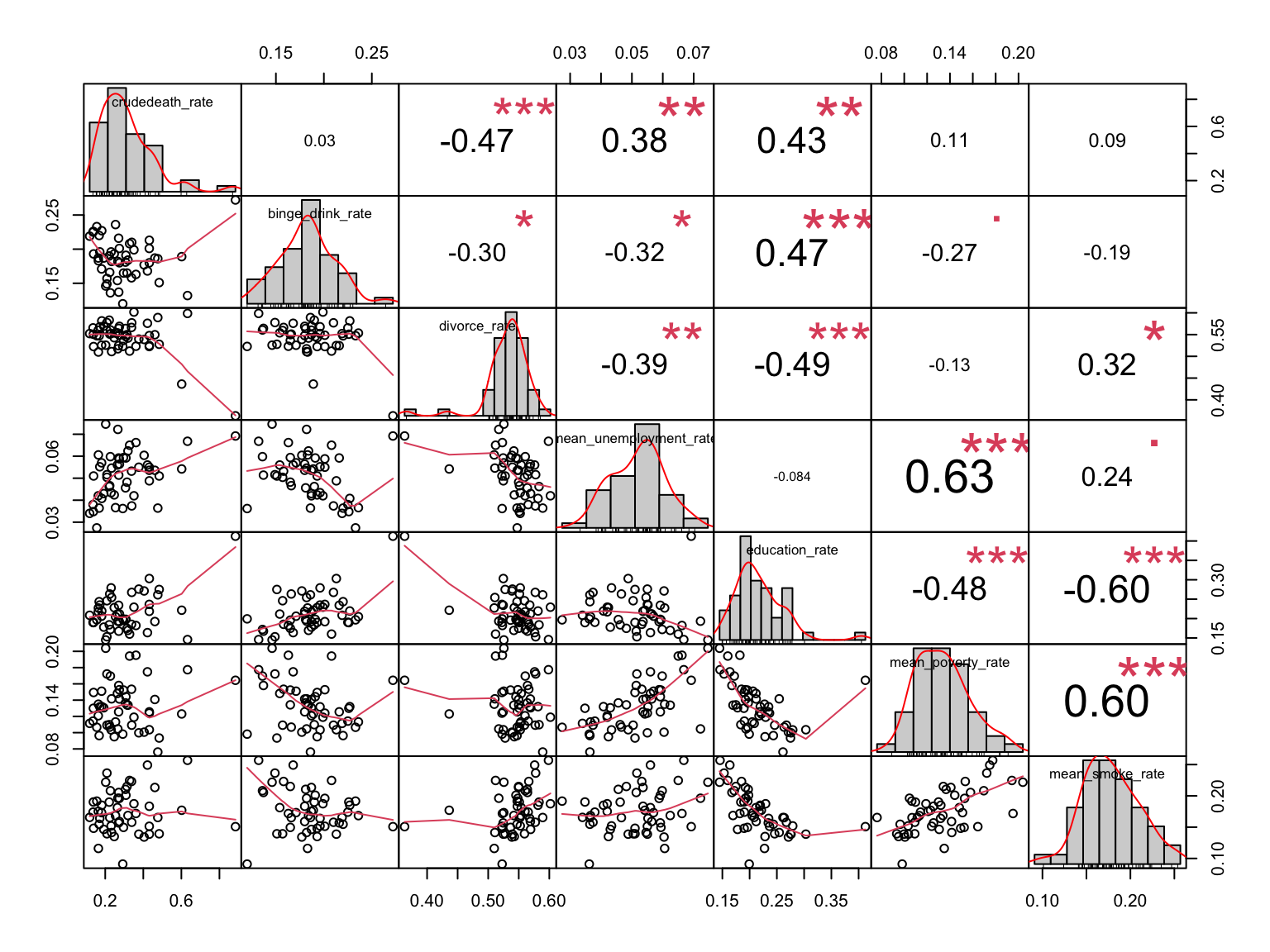

Correlation Matrix

corr_matrix =

overall_regression %>%

chart.Correlation(histogram = TRUE, method = "pearson")

Comments:

For those variables that are strongly correlated (***) with each other, we add their interaction into the regression model for further analysis.

Two-ways interaction we consider:

binge_drink_rate * education_rate

divorce_rate * education_rate

mean_unemployment_rate * mean_poverty_rate

education_rate * mean_poverty_rate

education_rate * mean_smoke_rate

mean_poverty_rate * mean_smoke_rate

Regression Modeling

Method:

The initial full model for crude death rate consists of all possible predictors and reasonable interaction.

Model_full: crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + binge_drink_rate * education_rate + mean_unemployment_rate * mean_poverty_rate + education_rate * mean_poverty_rate + education_rate * mean_smoke_rate + mean_poverty_rate * mean_smoke_rate + divorce_rate * education_rate

Here we are going to use two modeling method and try to generate a better regression model to explain crude death rate.

Stepwise Modeling

stepwise =

step(lm(crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + binge_drink_rate * education_rate + mean_unemployment_rate * mean_poverty_rate + education_rate * mean_poverty_rate + education_rate * mean_smoke_rate + mean_poverty_rate * mean_smoke_rate + divorce_rate * education_rate, data = overall_regression),direction = "both", trace = 0)Based on the stepwise results, the best model is model_1.

Model_1: crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + mean_poverty_rate:mean_smoke_rate + divorce_rate:education_rate

This model can be described in the following parameter:

summary(stepwise) %>% broom::tidy() %>% knitr::kable()| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -0.1823514 | 0.7988419 | -0.2282697 | 0.8205712 |

| binge_drink_rate | -0.9643137 | 0.6718818 | -1.4352432 | 0.1588030 |

| divorce_rate | 1.1058355 | 1.4478464 | 0.7637795 | 0.4493718 |

| mean_unemployment_rate | 3.1693281 | 2.1249877 | 1.4914571 | 0.1434962 |

| education_rate | 6.4472365 | 2.3587499 | 2.7333277 | 0.0092123 |

| mean_poverty_rate | -5.7853504 | 3.0285863 | -1.9102478 | 0.0631129 |

| mean_smoke_rate | -1.0370218 | 2.3260266 | -0.4458340 | 0.6580627 |

| mean_poverty_rate:mean_smoke_rate | 23.4829679 | 15.8225164 | 1.4841488 | 0.1454173 |

| divorce_rate:education_rate | -9.4598001 | 4.9459427 | -1.9126384 | 0.0627972 |

summary(stepwise) %>% broom::glance() %>% knitr::kable()| r.squared | adj.r.squared | sigma | statistic | p.value | df | df.residual | nobs |

|---|---|---|---|---|---|---|---|

| 0.6163078 | 0.541441 | 0.0981217 | 8.23206 | 1.4e-06 | 8 | 41 | 50 |

Regsubset() Compare All Possible Models

reg_subsets =

regsubsets(crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + binge_drink_rate * education_rate + mean_unemployment_rate * mean_poverty_rate + education_rate * mean_poverty_rate + education_rate * mean_smoke_rate + mean_poverty_rate * mean_smoke_rate + divorce_rate * education_rate, data = overall_regression, nvmax = 12)

summary(reg_subsets)Method: This table describes the different statistics value for different number of predictors the model contains. For each number, regsubset() produces the best model and outputs the statistics in the following.

cri_measure =

cbind(

cp = summary(reg_subsets)$cp,

r2 = summary(reg_subsets)$rsq,

adj_r2 = summary(reg_subsets)$adjr2,

BIC = summary(reg_subsets)$bic

)

cri_measure## cp r2 adj_r2 BIC

## [1,] 24.568548 0.3055733 0.2911061 -10.4093868

## [2,] 14.151243 0.4277652 0.4034148 -16.1742284

## [3,] 6.317893 0.5245299 0.4935210 -21.5244707

## [4,] 3.981957 0.5671975 0.5287261 -22.3135710

## [5,] 3.236676 0.5942123 0.5481001 -21.6241191

## [6,] 3.301372 0.6132566 0.5592924 -20.1155275

## [7,] 4.689757 0.6192751 0.5558210 -16.9877323

## [8,] 6.326699 0.6228478 0.5492571 -13.5471160

## [9,] 7.034735 0.6355613 0.5535626 -11.3496172

## [10,] 9.007389 0.6358304 0.5424536 -7.4745279

## [11,] 11.001814 0.6358853 0.5304836 -3.5700381

## [12,] 13.000000 0.6359031 0.5178176 0.3395343Based on cp, the “best” model contains five predictors.

Model_2: crudedeath_rate ~ binge_drink_rate + education_rate + mean_poverty_rate + mean_poverty_rate:mean_smoke_rate + divorce_rate:education_rate

This model can be described in the following parameter:

model_2 = lm(data = overall_regression, formula = crudedeath_rate ~ binge_drink_rate + education_rate + mean_poverty_rate + mean_poverty_rate:mean_smoke_rate + divorce_rate:education_rate)

summary(model_2) %>% broom::tidy() %>% knitr::kable()| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | 0.3219639 | 0.2096655 | 1.535607 | 0.1317951 |

| binge_drink_rate | -1.4348310 | 0.5377575 | -2.668175 | 0.0106366 |

| education_rate | 5.2963056 | 0.7760553 | 6.824650 | 0.0000000 |

| mean_poverty_rate | -4.1019627 | 1.2793286 | -3.206340 | 0.0025056 |

| mean_poverty_rate:mean_smoke_rate | 18.0807105 | 4.0391521 | 4.476363 | 0.0000532 |

| education_rate:divorce_rate | -6.7196736 | 1.8057116 | -3.721344 | 0.0005592 |

summary(model_2) %>% broom::glance() %>% knitr::kable()| r.squared | adj.r.squared | sigma | statistic | p.value | df | df.residual | nobs |

|---|---|---|---|---|---|---|---|

| 0.5942123 | 0.5481001 | 0.0974067 | 12.88622 | 1e-07 | 5 | 44 | 50 |

Based on adjusted r square, we get model_3 as the “best” model.

Model_3: crudedeath_rate ~ binge_drink_rate + education_rate + mean_poverty_rate + mean_poverty_rate:mean_smoke_rate + divorce_rate:education_rate + mean_unemployment_rate:mean_poverty_rate

This model can be described in the following parameter:

model_3 = lm(data = overall_regression, formula = crudedeath_rate ~ binge_drink_rate + education_rate + mean_poverty_rate + mean_poverty_rate:mean_smoke_rate + divorce_rate:education_rate + mean_unemployment_rate:mean_poverty_rate)

summary(model_3) %>% broom::tidy() %>% knitr::kable()| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | 0.3801708 | 0.2108814 | 1.802771 | 0.0784354 |

| binge_drink_rate | -1.0853390 | 0.5828431 | -1.862146 | 0.0694250 |

| education_rate | 4.7397334 | 0.8565289 | 5.533653 | 0.0000017 |

| mean_poverty_rate | -5.9904343 | 1.8111909 | -3.307456 | 0.0019082 |

| mean_poverty_rate:mean_smoke_rate | 17.9418971 | 3.9899597 | 4.496761 | 0.0000516 |

| education_rate:divorce_rate | -5.9249642 | 1.8649682 | -3.176979 | 0.0027542 |

| mean_poverty_rate:mean_unemployment_rate | 22.6088897 | 15.5372534 | 1.455141 | 0.1528923 |

summary(model_3) %>% broom::glance() %>% knitr::kable()| r.squared | adj.r.squared | sigma | statistic | p.value | df | df.residual | nobs |

|---|---|---|---|---|---|---|---|

| 0.6132566 | 0.5592924 | 0.0961929 | 11.36414 | 1e-07 | 6 | 43 | 50 |

Based on BIC, we get model_4 as the “best” model.

Model_4: crudedeath_rate ~ binge_drink_rate + education_rate + mean_smoke_rate + divorce_rate:education_rate

This model can be described in the following parameter:

model_4 = lm(data = overall_regression, formula = crudedeath_rate ~ binge_drink_rate + education_rate + mean_smoke_rate + divorce_rate:education_rate)

summary(model_4) %>% broom::tidy() %>% knitr::kable()| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | -0.214375 | 0.1768821 | -1.211965 | 0.2318510 |

| binge_drink_rate | -1.565518 | 0.5413276 | -2.891997 | 0.0058775 |

| education_rate | 4.874933 | 0.6976179 | 6.987970 | 0.0000000 |

| mean_smoke_rate | 2.212329 | 0.5296644 | 4.176850 | 0.0001339 |

| education_rate:divorce_rate | -5.427036 | 1.4483395 | -3.747074 | 0.0005074 |

summary(model_4) %>% broom::glance() %>% knitr::kable()| r.squared | adj.r.squared | sigma | statistic | p.value | df | df.residual | nobs |

|---|---|---|---|---|---|---|---|

| 0.5671975 | 0.5287261 | 0.0994728 | 14.74338 | 1e-07 | 4 | 45 | 50 |

Regression Modeling without Interaction

Method:

The initial full model for crude death rate consists of all possible predictors.

Model_full: crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate

Here we are going to use two modeling method and try to generate a better regression model to explain crude death rate.

Stepwise Modeling

stepwise_no_inter =

step(lm(crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate, data = overall_regression),direction = "both", trace = 0)Based on the stepwise results, the best model is model_1.

Model_5: crudedeath_rate ~ binge_drink_rate + divorce_rate + education_rate + mean_smoke_rate

Comment:

Since the model from regsubset() without considering the interaction is the same as model_5, we will not discuss the details of that method here.

This model can be described in the following parameter:

summary(stepwise_no_inter) %>% broom::tidy() %>% knitr::kable()| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | 0.4646221 | 0.3226392 | 1.440067 | 0.1567697 |

| binge_drink_rate | -1.5395071 | 0.5520224 | -2.788849 | 0.0077258 |

| divorce_rate | -1.5061409 | 0.4402422 | -3.421165 | 0.0013370 |

| education_rate | 2.2751208 | 0.4591017 | 4.955592 | 0.0000107 |

| mean_smoke_rate | 2.5809509 | 0.5356159 | 4.818660 | 0.0000168 |

summary(stepwise_no_inter) %>% broom::glance() %>% knitr::kable()| r.squared | adj.r.squared | sigma | statistic | p.value | df | df.residual | nobs |

|---|---|---|---|---|---|---|---|

| 0.5493662 | 0.5093098 | 0.1015012 | 13.71484 | 2e-07 | 4 | 45 | 50 |

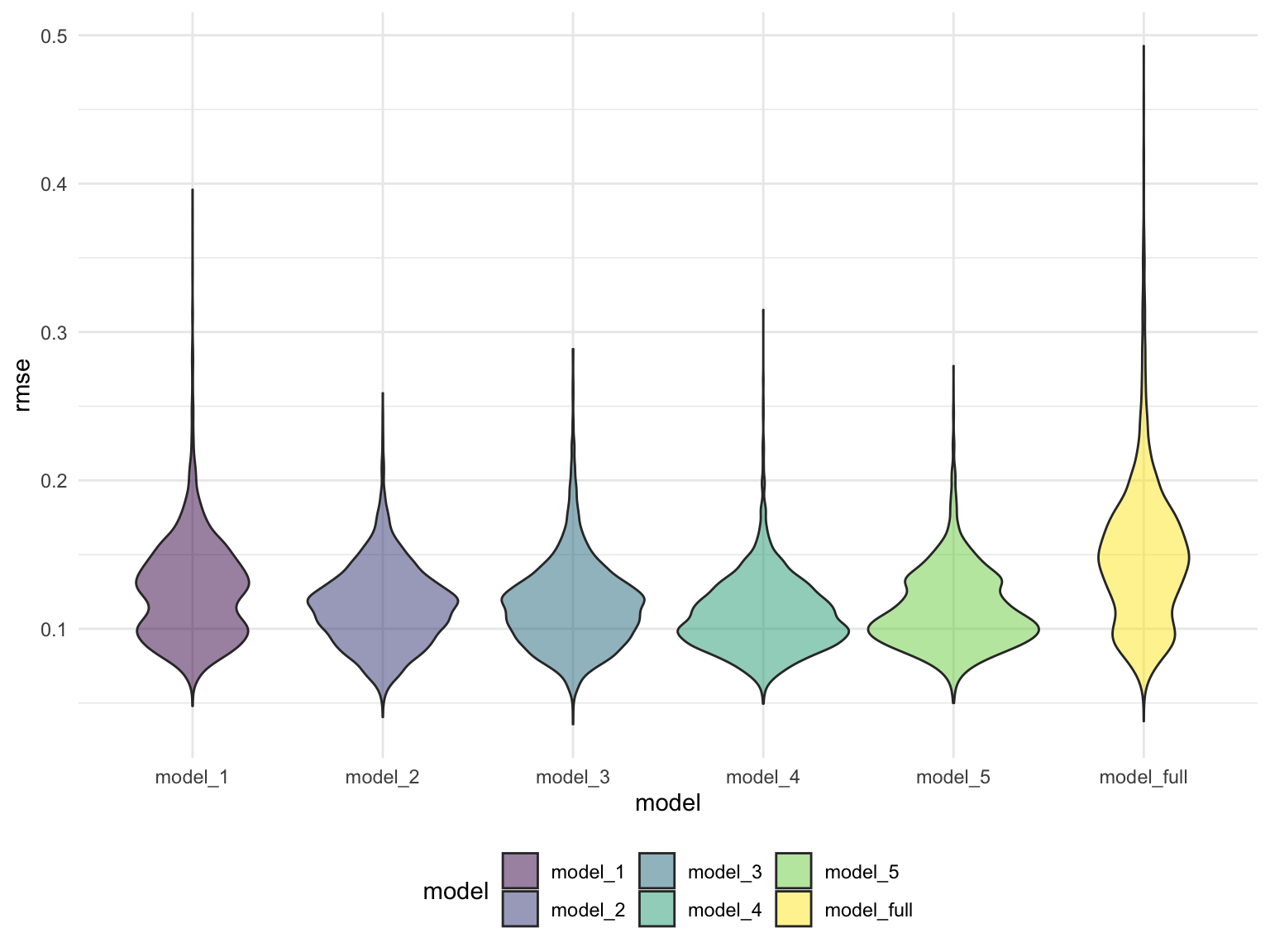

Cross Validation

We now have five candidates as considered possible “best” model for drug overdose crude death rate:

Model_1: crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + binge_drink_rate:education_rate.

Model_2: crudedeath_rate ~ mean_poverty_rate + mean_unemployment_rate:mean_poverty_rate + education_rate:mean_poverty_rate + mean_poverty_rate:mean_smoke_rate

Model_3: crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_poverty_rate + binge_drink_rate:education_rate +mean_unemployment_rate:mean_poverty_rate + mean_poverty_rate:mean_smoke_rate

Model_4: crudedeath_rate ~ binge_drink_rate + education_rate + mean_smoke_rate + divorce_rate:education_rate

Model_5: crudedeath_rate ~ binge_drink_rate + divorce_rate + education_rate + mean_smoke_rate

In cross validation process, we are going to compare the cross-validated prediction error for these five model and their result with initial full model.

train_df = sample_n(overall_regression, 41)

test_df = anti_join(overall_regression, train_df)

set.seed(1)

cv_modeling_df =

crossv_mc(overall_regression, 5000) %>%

mutate(

train = map(train, as_tibble),

test = map(test, as_tibble)

) %>%

mutate(

model_1 = map(.x = train, ~lm(crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + binge_drink_rate:education_rate, data = .x)),

model_2 = map(.x = train, ~lm(crudedeath_rate ~ mean_poverty_rate + mean_unemployment_rate:mean_poverty_rate + education_rate:mean_poverty_rate + mean_poverty_rate:mean_smoke_rate, data = .x)),

model_3 = map(.x = train, ~lm(crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_poverty_rate + binge_drink_rate:education_rate +mean_unemployment_rate:mean_poverty_rate + mean_poverty_rate:mean_smoke_rate, data = .x)),

model_4 = map(.x = train, ~lm(crudedeath_rate ~ binge_drink_rate + education_rate + mean_smoke_rate + divorce_rate:education_rate, data = .x)),

model_5 = map(.x = train, ~lm(crudedeath_rate ~ binge_drink_rate + divorce_rate + education_rate + mean_smoke_rate, data = .x)),

model_full = map(.x = train, ~lm(crudedeath_rate ~ binge_drink_rate + divorce_rate + mean_unemployment_rate + education_rate + mean_poverty_rate + mean_smoke_rate + binge_drink_rate * education_rate + mean_unemployment_rate * mean_poverty_rate + education_rate * mean_poverty_rate + education_rate * mean_smoke_rate + mean_poverty_rate * mean_smoke_rate, data = .x))

) %>%

mutate(

rmse_model_1 = map2_dbl(.x = model_1, .y = test, ~rmse(model = .x, data = .y)),

rmse_model_2 = map2_dbl(.x = model_2, .y = test, ~rmse(model = .x, data = .y)),

rmse_model_3 = map2_dbl(.x = model_3, .y = test, ~rmse(model = .x, data = .y)),

rmse_model_4 = map2_dbl(.x = model_4, .y = test, ~rmse(model = .x, data = .y)),

rmse_model_5 = map2_dbl(.x = model_5, .y = test, ~rmse(model = .x, data = .y)),

rmse_model_full = map2_dbl(.x = model_full, .y = test, ~rmse(model = .x, data = .y))

)

cv_modeling_output =

cv_modeling_df %>%

select(.id, starts_with("rmse")) %>%

pivot_longer(

rmse_model_1:rmse_model_full,

names_to = "model",

values_to = "rmse",

names_prefix = "rmse_"

) %>%

ggplot(aes(x = model, y = rmse)) +

geom_violin(aes(fill = model), alpha = 0.5)

cv_modeling_output

Comments:

All five models we select are better than the initial model. Model_5 may be slightly better than other four.

Conclusion

The better model to explain the variances (from 2015 - 2019) of crude death rate of drug overdose for fifty states and Washington D.C. is model 5.

Model_5: crudedeath_rate ~ binge_drink_rate + divorce_rate + education_rate + mean_smoke_rate

summary(stepwise_no_inter) %>% broom::tidy() %>% knitr::kable()| term | estimate | std.error | statistic | p.value |

|---|---|---|---|---|

| (Intercept) | 0.4646221 | 0.3226392 | 1.440067 | 0.1567697 |

| binge_drink_rate | -1.5395071 | 0.5520224 | -2.788849 | 0.0077258 |

| divorce_rate | -1.5061409 | 0.4402422 | -3.421165 | 0.0013370 |

| education_rate | 2.2751208 | 0.4591017 | 4.955592 | 0.0000107 |

| mean_smoke_rate | 2.5809509 | 0.5356159 | 4.818660 | 0.0000168 |

Predictor evaluation: Binge drink rate and divorce rate are negatively associated with crude death rate of drug overdose. This means states with the higher value of these predictors may have a lower death rate for drug overdose. Education rate and smoke rate are positively associated with crude death rate of drug overdose. This means that states which have higher proportion of population with bachelor’s degree or above, or states with higher prevalence of adult smokers may also have a higher rate of death induced by drug overdose. All four predictors are significant in this models.

summary(stepwise_no_inter) %>% broom::glance() %>% knitr::kable()| r.squared | adj.r.squared | sigma | statistic | p.value | df | df.residual | nobs |

|---|---|---|---|---|---|---|---|

| 0.5493662 | 0.5093098 | 0.1015012 | 13.71484 | 2e-07 | 4 | 45 | 50 |

Model description: Overall, this model explains around 50% of the difference of crude death rate of drug overdose among fifty states and Washington D.C.. However, since the sample size we use is quite small(50 + 1 = 51), this model might not be very reliable.